
18 Nov 2025

Executive Summary

Across the life sciences ecosystem, organizations are working to design more robust clinical trials, improve diagnostic precision, and enhance patient outcomes. Commercial and marketing teams aim to adapt to dynamic markets and optimize omnichannel engagement strategies, while content teams strive to deliver personalized experiences for diverse audiences. Artificial Intelligence (AI) is turning these ambitions into achievable outcomes.

However, the stakes in life sciences are far higher than in consumer technology. Every AI-generated recommendation, prediction, or decision can directly affect patient safety, medical outcomes, and regulatory compliance. To address these risks, the EU AI Act, implemented by the European AI Office, and similar regulations worldwide are setting the tone for responsible AI development and deployment. Without clear guidelines for human-AI interaction, even the most advanced tools can introduce serious risks for both organizations and patients.

At its core, human-centered AI in healthcare must amplify human expertise, not replace it. Too often, organizations have adopted AI as a “magic wand,” only to realize that it fails to align with real-world life sciences workflows. The challenge is clear: how do we build trustworthy AI in healthcare that is safe, reliable, and compliant, while empowering clinicians rather than constraining them?

This paper outlines key policies, guidelines, and design practices for building safe, reliable, and human-centered AI systems in life sciences. It also introduces Indegene’s Human-AI Interaction (HAI) framework, a practical and scalable model for ensuring AI reliability and trustworthiness.

In the years ahead, the organizations that lead will not be those with the most AI tools, but those that can design safe, reliable, and human-centered AI experiences that clinicians and patients trust.

The High-Stakes Reality of AI in Life Sciences

AI in life sciences does not operate in a low-risk environment. Every output carries implications that go far beyond efficiency or convenience, directly affecting lives, safety, and organizational integrity. Several factors contribute to the high-stakes nature of AI deployment in this domain.

Clinical Accuracy

In clinical settings, even minor model errors can have significant downstream consequences: missed or delayed diagnoses, incorrect risk stratification, or inappropriate therapy recommendations. A 2025 meta-analysis of generative AI models used for diagnostic tasks reported an overall diagnostic accuracy of 52.1%. While AI performance was comparable to that of non-expert physicians, it lagged behind expert clinicians. These findings reinforce the need to improve AI reliability through enriched datasets and better training methods, and to integrate AI seamlessly into clinician workflows rather than expecting clinicians to adapt to rigid AI systems.

Patient Safety

AI can improve the speed and sensitivity of disease detection, but it also introduces novel safety risks. Overconfidence, spurious outputs, and context mismatch can lead to clinically unsafe recommendations. Several early clinical AI deployments revealed unsafe or incorrect treatment suggestions during internal reviews, emphasizing why human oversight, validation, and responsible governance remain central to trustworthy AI in healthcare.

Regulatory Compliance

The life sciences industry operates under stringent regulatory oversight. Frameworks such as HIPAA, GDPR, FDA and EMA guidance, and ICH guidelines demand traceability, validation, and responsible handling of sensitive health data. The EU AI Act is the first comprehensive legal framework addressing the unique risks of AI systems. It establishes a risk-based approach emphasizing transparency, documentation, auditability, governance, and data quality, all of which are core elements of a trustworthy AI framework.

Reputational Risk

Beyond patient harm and compliance penalties, AI failures can carry lasting reputational damage. High-profile incidents involving flawed AI recommendations have eroded trust among clinicians, regulators, and patients. Once credibility is lost, health systems and vendors face longer adoption cycles, lost business opportunities, and increased regulatory scrutiny.

Challenges with Human-AI Interaction in Life Sciences

Despite rapid advances, the interaction between humans and AI remains one of the most critical gaps in achieving safe and reliable deployment.

AI models that perform well in controlled research environments often underperform in real-world healthcare settings. A narrative review found that such models frequently disrupt clinical workflows and fail to account for variations in patient populations, staffing, and infrastructure.

A human-centered evaluation of a deep learning system for detecting diabetic retinopathy in clinics in Thailand illustrates this challenge. The system required extremely high-quality retinal images, but in real clinical environments, nurses struggled to capture consistently acceptable images. As a result, the AI rejected numerous inputs, forcing clinicians to devise workarounds that compromised usability and efficiency.


Indegene’s Human-AI Interaction (HAI) Framework for Life Sciences

To make AI adoption safe, practical, and trustworthy, Indegene has developed a Human-AI Interaction (HAI) Framework, a structured approach that bridges ethical principles, regulatory requirements, and real-world clinical practice.

Built on three interconnected layers, this framework translates the philosophy of human-centered AI into tangible design and operational guidance:

Policies: Core principles defining what must always be true, our non-negotiable constraints and obligations.

Guidelines: Actionable rules that guide how to design and deliver policy-compliant, trustworthy AI experiences.

Design Patterns and Examples: A continuously evolving library of proven interaction models that teams can adopt as practical references.

Together, these layers help organizations move beyond theoretical ethics to design AI systems that are transparent, safe, and aligned with the way healthcare professionals (HCPs) work.

HAI Policies

At the foundation of this framework are policies designed to protect patient safety, ensure scientific integrity, and promote user confidence.

Clinical Accuracy and Safety First

AI must never compromise patient outcomes or scientific validity. Every output should be up to date, verifiable, and grounded in trusted data sources.

Transparency, Traceability, and Explainability

Each AI-generated insight should include clear, user-friendly explanations while maintaining comprehensive documentation, dataset lineage, and model logs for regulatory review and audit.

Automate Thoughtfully

Automate only routine tasks where automation is safe. In all high-stakes contexts, human users must retain control and final decision-making authority.

Data Privacy and Regulatory Compliance

Every AI capability must actively protect sensitive patient and proprietary data, in compliance with HIPAA, GDPR, and other domain-specific standards.

Fit AI to Real Human Workflows

AI systems should adapt to existing clinical processes, not disrupt them. Datasets and models must be diverse, tested for bias, and grounded in trusted data sources.

This foundation ensures that AI in life sciences is not only powerful but also reliable, ethical, and aligned with real human expertise.

HAI Guidelines

While policies define the “what” and “why,” guidelines define the “how,” translating ethical intent into practical design. These guidelines for human-AI interaction ensure that AI is understandable, trustworthy, and supportive of existing workflows.

Communicate Capabilities and Limits Clearly: Set accurate expectations by explaining what the AI can and cannot do, its reliability, and expected benefits, without overwhelming users with technical jargon.

Start with the User's Workflow: Let users configure or select options that match their goals and ways of working.

Enable Safe Learning and Testing: Offer guided demos, reversible test runs, or sandbox environments so users can evaluate risks and rewards before adoption.

Time Suggestions to Context: Deliver insights or recommendations only when relevant to the user's immediate task. Keep information concise and in plain language.

Keep Users in Control: Allow users to review, edit, or reverse AI outputs before they take effect.

Explain Decisions Simply: Provide brief, intuitive explanations that outline the reasoning behind each recommendation.

Support High-Risk Tasks: For actions with safety or regulatory implications, offer built-in guardrails, validations, and extra confirmations.

Explain Outcomes Clearly: Summarize the process, data sources, and rationale behind final outputs.

Provide Error Handling and Auditability: Present errors in simple terms, suggest corrective actions, and generate exportable audit trails for compliance.

Encourage Exploration: Help users discover related topics or evidence to enhance research and decision-making.

Learn from Feedback: Incorporate user feedback and behavioral data to improve system accuracy, always transparently communicating what has changed and allowing opt-out or reversion.

Update Cautiously: Deploy model, data, or UX updates gradually, with proactive communication explaining their impact on outcomes.
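The “Update Cautiously” guideline can be put into practice in several ways. One common technique is a percentage-based staged rollout: each user is deterministically mapped to a bucket by hashing their ID, so the same person always sees the same model version while the rollout share is raised step by step. The minimal Python sketch below assumes hypothetical version labels (`model-v1`, `model-v2`) and function names; it illustrates the technique and is not part of Indegene’s framework.

```python
import hashlib


def rollout_bucket(user_id: str, feature: str) -> int:
    """Map a user deterministically to a bucket in [0, 100).

    Hashing feature + user_id gives each feature an independent
    assignment, and the same user always lands in the same bucket.
    """
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def model_version_for(user_id: str, rollout_percent: int,
                      stable: str = "model-v1",
                      candidate: str = "model-v2") -> str:
    """Serve the candidate version only to users inside the rollout slice.

    Raising rollout_percent only adds users; anyone already on the
    candidate stays on it, which keeps their experience consistent.
    """
    if not 0 <= rollout_percent <= 100:
        raise ValueError("rollout_percent must be between 0 and 100")
    bucket = rollout_bucket(user_id, "model-update")
    return candidate if bucket < rollout_percent else stable


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(1000)]
    for pct in (5, 25, 100):
        share = sum(model_version_for(u, pct) == "model-v2" for u in users) / len(users)
        print(f"{pct}% rollout -> {share:.1%} of users on candidate")
```

Because buckets are stable, each widening step can be paired with the proactive communication the guideline calls for, and rolling back means simply lowering the percentage.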

By embedding these human-centered AI principles into everyday workflows, organizations can achieve a balance between innovation, safety, and usability, creating AI systems that clinicians and regulators can truly trust.

Making HAI Actionable Through User-Centered Design in Life Sciences

At Indegene, our HAI guidelines are embedded into a user-centered design process that makes AI both powerful and practical. This ensures that AI systems remain intuitive, transparent, and trustworthy in life sciences.

The process follows five key stages:

Discover and empathize with users: Understand user goals, challenges, and workflows.

Define the problem: Frame user and business needs with clarity.

Ideate and explore: Brainstorm and map multiple solution paths.

Prototype and test: Build and evaluate interactions within real-world workflows.

Refine and prepare for scale: Incorporate feedback, improve usability, and validate compliance.

Within this process, policies and guidelines act as the rules of engagement, governing how information is presented, how much control users have, and how trust is built. Features define what the software can do; guidelines define how it should behave. The true value of HAI lies in combining capabilities with guardrails to deliver safe, reliable, and human-centered AI experiences.

Practical Applications

Clinical Trial Cohort Selection

Before HAI Guidelines

The tool automatically selected candidate participants from patient records.


After HAI Guidelines

The tool now provides the rationale for its recommendations, displays confidence levels, maintains an exportable audit trail, and requires clinician approval before outreach, enhancing both trust and compliance.
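The “after” behavior can be sketched as a small data model: each recommendation carries its rationale and a confidence score, every step is appended to an audit trail that can be exported as JSON, and outreach is blocked until a clinician approves. The class, fields, status values, and example criterion below are hypothetical and chosen for illustration; this is not Indegene’s implementation.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone


@dataclass
class CohortRecommendation:
    """One AI-suggested trial candidate awaiting clinician review (hypothetical schema)."""
    patient_id: str
    rationale: list[str]        # human-readable reasons, e.g. matched criteria
    confidence: float           # model score in [0, 1], shown to the reviewer
    status: str = "pending"     # pending -> approved or rejected
    audit_trail: list[dict] = field(default_factory=list)

    def __post_init__(self) -> None:
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be in [0, 1]")
        self._log("model", "recommended", f"confidence={self.confidence:.2f}")

    def _log(self, actor: str, action: str, note: str = "") -> None:
        # Append-only record of who did what and when (UTC timestamps).
        self.audit_trail.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "note": note,
        })

    def review(self, clinician_id: str, approve: bool, note: str = "") -> None:
        # The clinician keeps final decision-making authority.
        if self.status != "pending":
            raise ValueError(f"recommendation already {self.status}")
        self.status = "approved" if approve else "rejected"
        self._log(clinician_id, self.status, note)

    def start_outreach(self) -> None:
        # Guardrail: no patient outreach without explicit clinician approval.
        if self.status != "approved":
            raise PermissionError("clinician approval required before outreach")
        self._log("system", "outreach_started")

    def export_audit(self) -> str:
        # Serialize the full record, including the audit trail, for compliance review.
        return json.dumps(asdict(self), indent=2)


if __name__ == "__main__":
    rec = CohortRecommendation("P-001", ["meets hypothetical inclusion criterion A"], 0.82)
    rec.review("clinician-17", approve=True, note="criteria verified against chart")
    rec.start_outreach()
    print(rec.export_audit())
```

Keeping the approval check inside `start_outreach` rather than in the user interface means the guardrail holds even if another part of the system calls it directly.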

Scientific Translation

Before HAI Guidelines

The tool translated scientific abstracts into local languages in one step.


After HAI Guidelines

The tool now produces a draft translation, highlights uncertain terminology, links to the original source text, and prompts expert review before publication, ensuring accuracy and accountability.
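Highlighting uncertain terminology could be approached in several ways. One simple, assumed approach is a glossary check: for every source term that has an approved translation, verify that the approved rendering appears in the draft, and flag it otherwise. The sketch keeps the source text next to the draft and never leaves the review state on its own. The glossary, the German example, and all names are hypothetical, and naive substring matching misses inflected forms, so this is a starting point rather than a production check.

```python
from dataclasses import dataclass, field


@dataclass
class DraftTranslation:
    source_text: str                     # original abstract, kept alongside the draft
    draft_text: str                      # machine-generated draft
    flagged_terms: list[str] = field(default_factory=list)
    status: str = "needs_expert_review"  # drafts are never auto-published


def flag_terminology(source_text: str, draft_text: str,
                     glossary: dict[str, str]) -> DraftTranslation:
    """Flag glossary terms found in the source whose approved translation
    does not appear in the draft.

    Uses case-insensitive substring matching only; real use would need
    tokenization, inflection handling, and a curated glossary.
    """
    src, tgt = source_text.lower(), draft_text.lower()
    flagged = [
        term for term, approved in glossary.items()
        if term.lower() in src and approved.lower() not in tgt
    ]
    return DraftTranslation(source_text, draft_text, flagged)


if __name__ == "__main__":
    # Hypothetical English-to-German glossary of approved terms.
    glossary = {"adverse event": "unerwünschtes Ereignis", "placebo": "Placebo"}
    draft = flag_terminology(
        "No adverse event was reported in the placebo arm.",
        "Im Placebo-Arm wurde keine Nebenwirkung berichtet.",
        glossary,
    )
    print(draft.flagged_terms, draft.status)
```

In this example the draft renders “adverse event” as a general word for side effect instead of the approved term, so the term is surfaced for the expert reviewer.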

Design Principles for Responsible AI in Life Sciences

Transparency and Explainability

As noted earlier, explaining how AI systems make decisions is critical to avoid creating a black box. However, transparency is not one-size-fits-all. It requires careful consideration of what information is truly valuable to users. In some cases, showing too much of the AI’s internal logic can overwhelm or confuse users, negatively affecting usability.

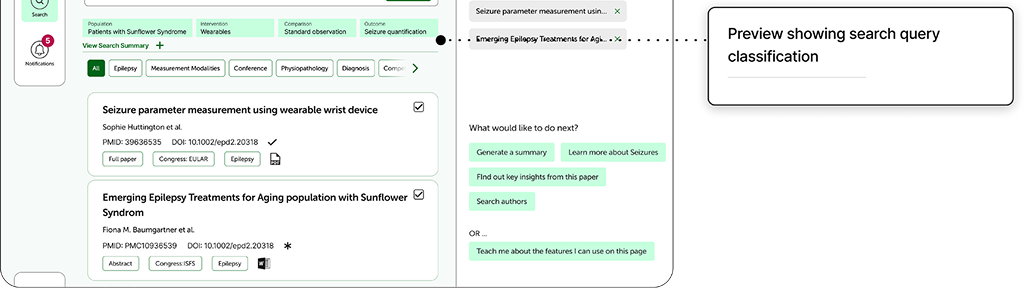

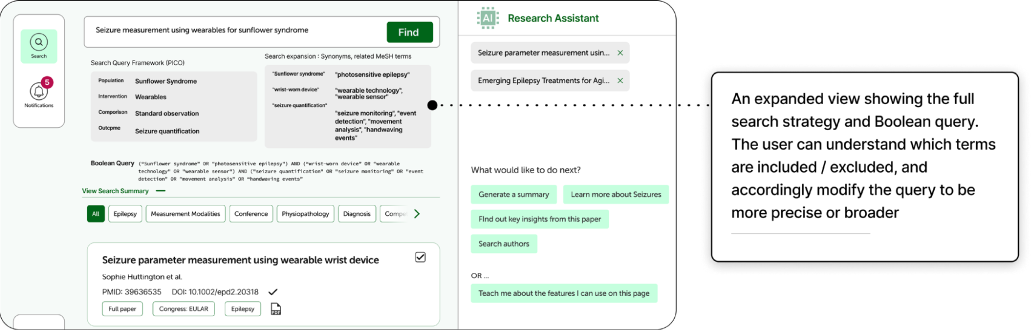

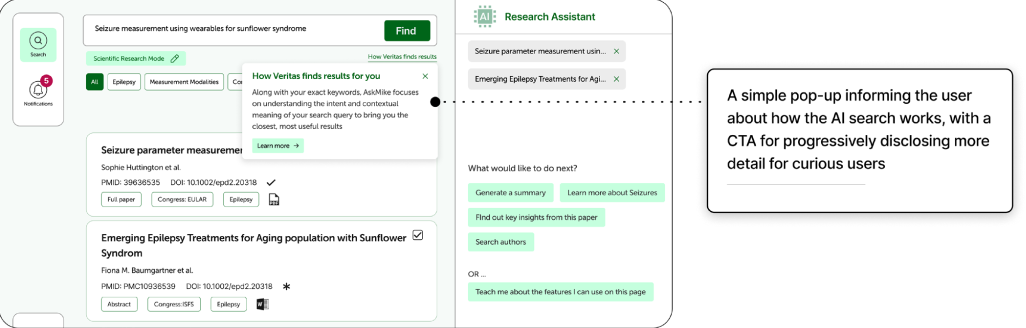

Indegene Case Study

Indegene designed a research tool for scientific users that features a repository of literature and an AI-powered search. Initially, we experimented with an AI-assisted query builder that converted a user’s research objective into a complex Boolean query, adding synonyms and relevant parameters automatically.

To maintain transparency, we implemented an expandable, contextual explanation of how each query was processed, tailored to the user’s input and written in simple language.

As the project evolved, collaboration with our GenAI team led to an upgrade from Boolean-based search to semantic search, which uses NLP and machine learning to interpret intent. This shift changed how we approached explainability. In semantic search, detailing every step of the model’s reasoning offers little practical value to end users. Instead, we adopted a layered transparency approach: a concise, general explanation of how semantic search works, with progressive disclosure guiding curious users to more detailed documentation.

This balance ensures that users gain trust and clarity without being burdened by unnecessary complexity.

Understanding User Needs

The foundation of any successful AI system lies in accurately identifying real user needs. The key questions to start with are:

What problem are we solving?

Who is the user, and what is their true goal?

Where can AI add meaningful value?

User insights directly inform data collection and model design. Evaluating user diversity and identifying potential biases, including gaps affecting underrepresented or marginalized groups, helps create datasets that are comprehensive, balanced, and inclusive.

It is essential to document and track datasets carefully, iteratively improve model performance through continuous updates, and maintain transparency in data lineage. This user-driven data strategy ensures that AI systems remain both equitable and effective.
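One way to make the representation check concrete is to compare each subgroup’s share of a dataset with its share in a documented reference population and flag groups that fall below a chosen ratio. The sketch below assumes a hypothetical `age_band` attribute, hypothetical reference shares, and an illustrative 0.8 ratio threshold; in practice these would come from the target patient population and the study design.

```python
from collections import Counter


def underrepresented_groups(records, attribute, reference_shares, min_ratio=0.8):
    """Return {group: observed_share} for groups whose share in the dataset
    is below min_ratio * their share in the reference population.

    reference_shares should come from a documented source (for example,
    the target patient population) so the check itself is traceable.
    """
    counts = Counter(r[attribute] for r in records)
    total = sum(counts.values())
    flagged = {}
    for group, ref_share in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if observed < min_ratio * ref_share:
            flagged[group] = round(observed, 3)
    return flagged


if __name__ == "__main__":
    # Hypothetical dataset: 90 records aged 18-64, 10 aged 65+.
    records = [{"age_band": "18-64"}] * 90 + [{"age_band": "65+"}] * 10
    reference = {"18-64": 0.7, "65+": 0.3}   # hypothetical population shares
    print(underrepresented_groups(records, "age_band", reference))
```

Running such a check each time the dataset is updated, and recording its result alongside the dataset version, supports the lineage documentation described above.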

Risk Management and Monitoring

AI deployment is not a one-time event and demands continuous oversight. Establishing a robust risk management and monitoring framework is crucial to maintaining reliability and compliance.

This includes:

Ongoing hazard identification, mitigation, and verification.

Monitoring real-world system performance through defined KPIs.

Periodic model evaluations to identify drift or degradation.

Procedures for reporting and addressing serious incidents promptly.

By continuously assessing and refining performance, organizations can ensure their AI systems remain safe, reliable, and aligned with human and regulatory expectations.
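For the periodic evaluations above, one widely used drift metric is the Population Stability Index (PSI), which compares how a variable (for example, model scores or a key input feature) is distributed now versus during a baseline period. The standard-library sketch below uses equal-width bins over the baseline range. The 0.1 and 0.25 thresholds are commonly cited rules of thumb, not values from this paper, and the sample data is hypothetical; both should be validated for each use case.

```python
import math


def _bin_shares(values, edges):
    """Fraction of values in each bin; out-of-range values are clamped
    into the first or last bin."""
    counts = [0] * (len(edges) - 1)
    last = len(counts) - 1
    for v in values:
        for i in range(len(counts)):
            if v < edges[i + 1] or i == last:
                counts[i] += 1
                break
    return [c / len(values) for c in counts]


def population_stability_index(baseline, current, n_bins=10, eps=1e-6):
    """PSI = sum over bins of (a - e) * ln(a / e), where e and a are the
    baseline and current shares of each bin. eps avoids log(0) for empty bins."""
    lo, hi = min(baseline), max(baseline)
    if lo == hi:
        raise ValueError("baseline has no spread; cannot form bins")
    width = (hi - lo) / n_bins
    edges = [lo + i * width for i in range(n_bins + 1)]
    expected = _bin_shares(baseline, edges)
    actual = _bin_shares(current, edges)
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


def drift_status(psi, warn=0.1, alert=0.25):
    """Map a PSI value to a status using rule-of-thumb thresholds."""
    if psi >= alert:
        return "alert"
    if psi >= warn:
        return "warn"
    return "stable"


if __name__ == "__main__":
    # Hypothetical data: validation-period scores vs. this month's scores.
    baseline_scores = [i / 100 for i in range(100)]
    current_scores = [0.5 + i / 200 for i in range(100)]
    psi = population_stability_index(baseline_scores, current_scores)
    print(f"PSI={psi:.3f}, status={drift_status(psi)}")
```

A PSI alert does not prove the model is wrong; it signals that inputs or outputs have shifted enough to warrant the human review and incident procedures listed above.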

Human-AI Interaction as a Strategic Advantage

Adopting a trustworthy AI framework delivers measurable business value for life sciences organizations. It helps them:

Reduce regulatory and clinical risk through clearer audit trails and fewer compliance lapses.

Increase trust and adoption as reviewers gain confidence in transparent, user-controlled systems.

Shorten review cycles with consistent, auditable outputs that accelerate approvals.

Improve outcomes by enhancing evidence synthesis, verification, and decision-making quality.

Leverage AI innovation safely through thoughtfully designed, human-centered AI experiences.

As AI capabilities advance, the organizations that succeed will be those that embed trust, transparency, and user-centered design into their systems from the start. Guidelines for human-AI interaction in life sciences will serve as strategic enablers for scaling innovation responsibly.

Strategic Applications of AI in Life Sciences

AI is reshaping how life sciences organizations operate, enhancing reliability, speed, and personalization across clinical research, medical education, and patient engagement.

Accelerating Drug Discovery: From clinical trial design to manufacturing optimization, AI accelerates innovation in data-heavy environments. One example is DeepMind’s AlphaFold, which predicts protein structures with unprecedented accuracy and has laid the groundwork for a deeper understanding of disease mechanisms and faster development of targeted therapies for both rare and common diseases.

Enabling Early Disease Detection: AI is proving especially transformative in early disease detection, where timely diagnosis can significantly improve patient outcomes. By integrating diverse data sources such as imaging, genomics, and electronic health records, AI models can detect subtle patterns that precede clinical symptoms. Various efforts are underway to improve sensitivity, specificity, and AI-clinician collaboration, with the aim of reducing mortality and treatment costs.

Optimizing Omnichannel Engagement: For HCPs, time is limited, and engagement channels are fragmented. AI enables life sciences companies to optimize omnichannel strategies by analyzing large datasets on HCP behavior, automating next-best-action recommendations, and delivering personalized outreach. This creates more efficient and relevant engagement, balancing in-person interactions with AI-enhanced digital experiences.

Personalizing Content at Scale: AI enables scalable personalization across audiences:

For HCPs, AI tailors content by specialty, region, or certification needs (e.g., state-specific CME modules).

For patients, it simplifies complex information, improving health literacy and engagement.

For marketers, it optimizes content for generative search engines, enhancing visibility and ROI.

By aligning trustworthy AI frameworks with human-centered design, life sciences organizations can create content that is accurate, relevant, and discoverable, while maintaining compliance and reliability.

Charting the Next Phase of AI: AI will continue to evolve into multimodal systems capable of learning from both structured and unstructured data such as imaging, EHR, genomic, and behavioral information. Life sciences organizations will increasingly co-develop AI solutions with technology partners, especially in precision therapeutics and connected care.

This will help realize the vision of a personalized, preventive, and data-driven life sciences industry that improves outcomes and lowers costs. The future of trustworthy AI in healthcare depends on frameworks that balance innovation with safety, transparency, and ethical responsibility.

From Framework to Action: Making Human-Centered AI Real

AI’s rapid evolution often clashes with the deliberate pace of life sciences, where patient safety, compliance, and ethics cannot be compromised. Success requires a strategy that combines accurate diagnosis, clear policies, and actionable implementation.

At Indegene, we bring these elements together through our HAI Framework:

Diagnosis: Our user-centered design approach uncovers real user needs and identifies where AI can add value without disrupting workflows.

Policies: Our HAI Policies establish guardrails for safe, compliant, and ethical AI design.

Actionable Steps: Our growing library of HAI Guidelines, Design Patterns, and examples provides teams with practical steps to humanize AI systems.

We continue to evolve our framework with each engagement, helping life sciences organizations implement reliable, safe, and usable human-centric AI experiences. With deep domain expertise and proven frameworks, we enable life sciences organizations to harness AI responsibly while driving measurable business impact. Contact us to learn more.
