29 May 2025

Machine learning (ML) is undoubtedly reshaping the life sciences industry and helping organizations unlock deeper insights from real-world data to guide smarter decisions. From predicting patient non-adherence to optimizing HCP engagement, ML models offer significant opportunities across patient services, commercial operations, and clinical development. Yet despite their promise, many initiatives falter at the point of deployment. Models that excel in controlled settings often underperform in production environments, where they must integrate with real-time data pipelines, meet regulatory demands, and operate reliably over time.

Here, we explore how MLOps, a discipline focused on operationalizing machine learning, helps life sciences organizations bridge that gap. Read on to discover what it takes to transform experimental ML models into enterprise-ready systems.

The deployment dilemma

In practice, many ML initiatives stall when moving from prototype to production. Models that perform well in isolated notebooks often fail under real-world conditions due to several factors.

Inconsistent environments

Development and production environments often differ in configuration, libraries, or runtime dependencies, which can cause compatibility issues when deploying ML models.
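One lightweight way to surface this kind of mismatch is to compare the package versions pinned in each environment before a model is deployed. The sketch below is illustrative only: the function name `diff_environments` and the package versions are assumptions, and in practice the two dictionaries might be built from each environment's lockfile or `pip freeze` output.

```python
from typing import Dict, Optional, Tuple


def diff_environments(
    dev: Dict[str, str], prod: Dict[str, str]
) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Return packages whose versions differ, mapped to (dev_version, prod_version).

    A version of None means the package is missing from that environment.
    """
    mismatches = {}
    for name in sorted(set(dev) | set(prod)):
        dev_version, prod_version = dev.get(name), prod.get(name)
        if dev_version != prod_version:
            mismatches[name] = (dev_version, prod_version)
    return mismatches


# Hypothetical snapshots of a data scientist's notebook environment
# and the production scoring environment.
dev_env = {"numpy": "1.26.4", "scikit-learn": "1.4.2", "pandas": "2.2.1"}
prod_env = {"numpy": "1.26.4", "scikit-learn": "1.3.0"}

mismatches = diff_environments(dev_env, prod_env)
for package, (dev_version, prod_version) in mismatches.items():
    print(f"{package}: dev={dev_version} prod={prod_version}")
```

A check like this could run as a pre-deployment step, so that a version mismatch blocks the release rather than appearing later as a scoring error.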

In life sciences, these issues are magnified by the regulatory environment. Models used to support patient decisions, clinical recommendations, or market access strategies must be explainable, traceable, and reproducible.

Without a structured operational framework, even the most sophisticated ML models offer little value beyond the lab.

Machine learning operations to the rescue

MLOps, short for Machine Learning Operations, is the discipline of managing the end-to-end lifecycle of ML systems in a way that is scalable, secure, and reliable. It introduces engineering rigor into data science workflows and allows organizations to move from experimentation to deployment in a controlled and governed manner.

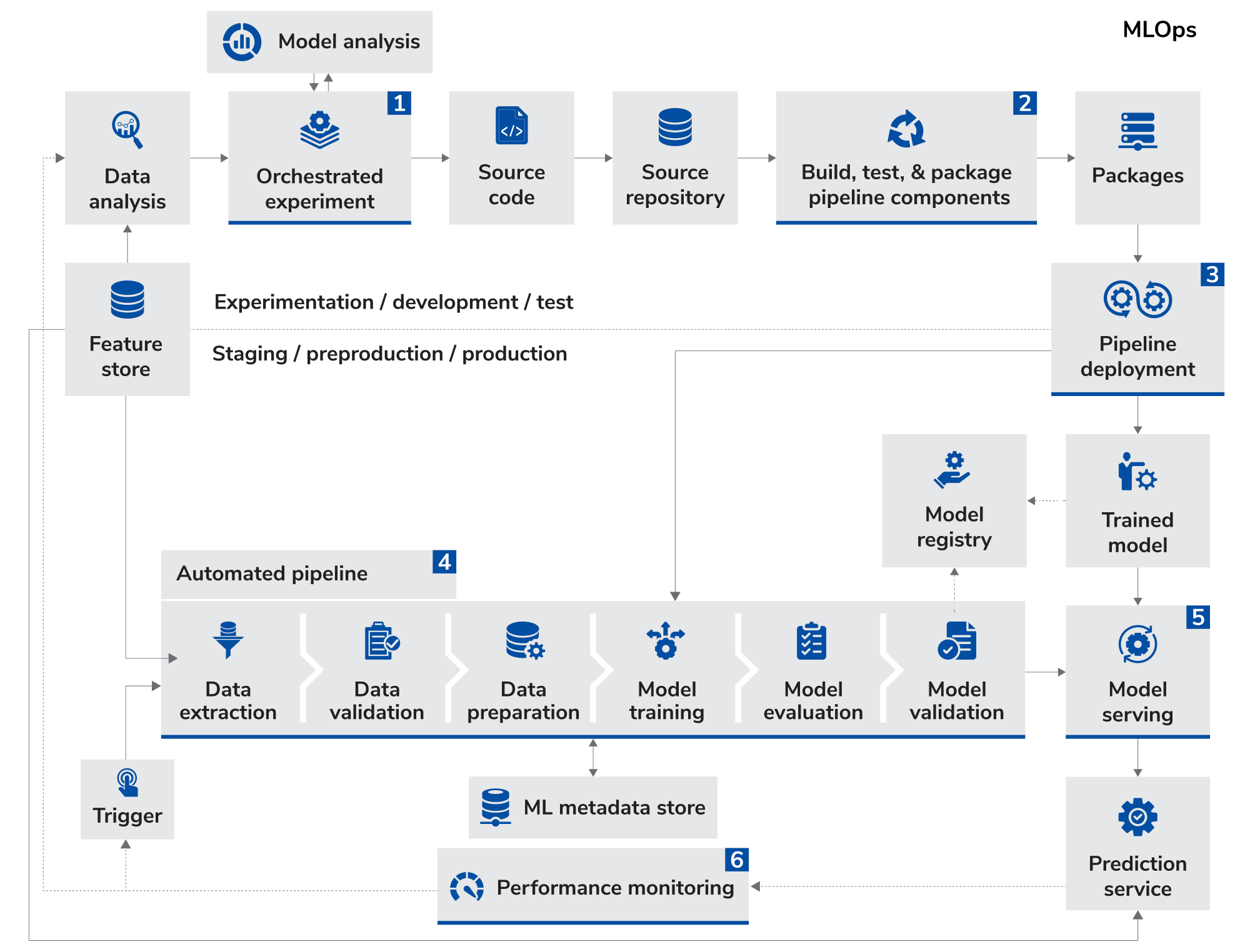

A mature MLOps framework automates the critical stages of the machine learning lifecycle, including data ingestion, feature pipeline versioning, model training, validation, deployment, and ongoing monitoring. It enables continuous improvement by systematically retraining models with new data and pushing updated models into production. At the same time, it maintains complete audit trails to ensure reproducibility, regulatory compliance, and reliable, scalable operations in real-world environments.

More importantly, it allows cross-functional teams, including data scientists, IT, compliance, and business stakeholders, to collaborate effectively on a shared platform, with clear controls over what gets deployed and how.
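To make "clear controls over what gets deployed" and "complete audit trails" more concrete, here is a minimal sketch of a promotion gate that records every review decision. Everything in it is an assumption for illustration: the `AuditEntry` fields, the `review_candidate` function, the model version names, and the 0.75 validation threshold are hypothetical rather than part of any specific MLOps platform.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List


@dataclass(frozen=True)
class AuditEntry:
    """One immutable record of a deployment decision (hypothetical schema)."""
    model_version: str
    metric_name: str
    metric_value: float
    threshold: float
    approved: bool
    reviewer: str
    timestamp: str


def review_candidate(
    model_version: str,
    metric_value: float,
    threshold: float,
    reviewer: str,
    audit_log: List[AuditEntry],
    metric_name: str = "validation_auc",
) -> bool:
    """Approve a candidate only if it meets the threshold; log the decision either way."""
    approved = metric_value >= threshold
    audit_log.append(
        AuditEntry(
            model_version=model_version,
            metric_name=metric_name,
            metric_value=metric_value,
            threshold=threshold,
            approved=approved,
            reviewer=reviewer,
            timestamp=datetime.now(timezone.utc).isoformat(),
        )
    )
    return approved


audit_log: List[AuditEntry] = []
first = review_candidate("adherence-v2", 0.71, 0.75, "compliance_reviewer", audit_log)
second = review_candidate("adherence-v3", 0.81, 0.75, "compliance_reviewer", audit_log)
```

Rejected candidates are logged as well as approved ones, which is the property an auditor would typically look for: the log shows not only what reached production but also what was held back and why.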

Key components of a mature, scalable MLOps framework

A mature MLOps framework is built on several critical pillars that ensure machine learning systems remain reliable, scalable, and continuously improving in live environments.

Data and feature management

Reliable models start with reliable data. A strong MLOps framework automates data engineering workflows to transform raw clinical, claims, and engagement data into structured, versioned feature sets. These pipelines are governed by strict version control and auditing mechanisms to maintain consistency between the features used during training and those applied in production scoring.

Maintaining full traceability—from raw data sources to final feature tables—strengthens transparency. This is essential in high-stakes applications like patient stratification or real-world evidence generation, where downstream decisions critically depend on the integrity and lineage of the underlying data.
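As a rough illustration of keeping training and production features consistent, a pipeline could fingerprint the feature-set definition at training time and verify that fingerprint again before scoring. The sketch below makes several assumptions: the spec layout, the table and feature names, and both function names are hypothetical.

```python
import hashlib
import json


def feature_set_fingerprint(feature_spec: dict) -> str:
    """Hash a feature-set definition in a key-order-independent way."""
    canonical = json.dumps(feature_spec, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def check_training_serving_parity(training_fingerprint: str, serving_spec: dict) -> None:
    """Raise if the serving feature definition differs from the one used in training."""
    serving_fingerprint = feature_set_fingerprint(serving_spec)
    if serving_fingerprint != training_fingerprint:
        raise ValueError(
            "Feature definition mismatch: "
            f"training={training_fingerprint[:12]} serving={serving_fingerprint[:12]}"
        )


# Hypothetical feature-set definition, including the raw sources it derives from.
training_spec = {
    "source_tables": ["claims_raw", "engagement_raw"],
    "features": {"days_since_last_refill": "int", "call_count_90d": "int"},
}
training_fingerprint = feature_set_fingerprint(training_spec)

# Stored alongside the trained model, then verified at scoring time.
check_training_serving_parity(training_fingerprint, training_spec)
```

Because the source tables are part of the hashed definition, a change anywhere in the lineage produces a different fingerprint, which gives a simple, checkable link between a model and the data it was built on.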

Image source: Google Cloud-MLOps

Business impact: Real-world MLOps use cases at a glance

Scalable machine learning operations directly accelerate time-to-value by reducing model deployment timelines from weeks to days. They also build lasting confidence among commercial and clinical teams by keeping predictions consistently accurate, even as underlying conditions evolve.

For instance, in a patient support program, deploying an adherence prediction model within a governed MLOps pipeline could enable case managers to identify high-risk patients earlier. As patient behavior data evolves, the model could retrain automatically, helping the team intervene sooner and potentially improving adherence rates and patient outcomes over time.

Similarly, a field force optimization model could guide next-best-action strategies for sales teams by adapting to real-time feedback and shifting market conditions. With continuous monitoring, insights might stay aligned with the realities in the field. This can increase trust among representatives and drive higher adoption compared to traditional static analytics approaches.
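The monitoring and automatic retraining described in these examples need some signal that the incoming data has moved away from what the model was trained on. One commonly used signal is the Population Stability Index (PSI), sketched below for a single numeric feature. This is an illustration under stated assumptions, not a prescribed method: the equal-width binning, the small floor on empty bins, the 0.2 retraining threshold, and the sample values are all illustrative choices.

```python
import math
from typing import List, Sequence


def _bin_proportions(
    values: Sequence[float], edges: List[float], floor: float = 1e-6
) -> List[float]:
    """Share of values per bin; out-of-range values are counted in the outer bins."""
    counts = [0] * (len(edges) - 1)
    for value in values:
        index = 0
        for i in range(len(edges) - 1):
            if value >= edges[i]:
                index = i
        counts[index] += 1
    # Floor empty bins so the logarithm below is always defined.
    return [max(count / len(values), floor) for count in counts]


def population_stability_index(
    baseline: Sequence[float], current: Sequence[float], n_bins: int = 5
) -> float:
    """PSI between a training-time sample and a recent production sample."""
    if not baseline or not current:
        raise ValueError("Both samples must be non-empty")
    low, high = min(baseline), max(baseline)
    width = (high - low) / n_bins
    edges = [low + i * width for i in range(n_bins + 1)]
    expected = _bin_proportions(baseline, edges)
    actual = _bin_proportions(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def should_retrain(psi: float, threshold: float = 0.2) -> bool:
    """Flag retraining when drift exceeds an (assumed) threshold."""
    return psi > threshold


# Hypothetical feature: days between refills, at training time vs. recently.
baseline_days = [float(d) for d in range(100)]
recent_days = [d + 50.0 for d in baseline_days]

psi = population_stability_index(baseline_days, recent_days)
print(f"PSI={psi:.2f}, retrain={should_retrain(psi)}")
```

In a governed pipeline, a flag like this would more plausibly open a retraining job that still passes through validation and approval, rather than pushing a new model straight to production.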

Operationalizing machine learning in life sciences is a game-changer

Building high-performing ML models is essential, but without robust operationalization, even the most advanced algorithms fall short of their potential. In life sciences, where patient safety, regulatory compliance, and data integrity are paramount, mature MLOps practices ensure that models move seamlessly into production, remain trustworthy over time, and deliver consistent insights across the organization. By embedding automation, traceability, and adaptive learning into the model lifecycle, companies can accelerate decision-making, improve outcomes, and drive innovation securely and at scale.

Notably, operationalizing machine learning is not just about efficiency; it is about unlocking the full value of data-driven intelligence—securely, at scale, and with confidence in complex, high-stakes environments.
